Preregistration and registered reports

Preregistration

Several (questionable) research practices can lead to publication bias and false positive findings. Important examples of such practices are p-hacking, HARKing (see below) and not publishing negative research results.

An effective solution is to define the research questions and analysis plan before observing the research outcomes, a process called preregistration. Preregistration helps researchers interpret the data while staying focused on the original research question (what can and cannot be concluded), increases transparency when reporting research, and helps prevent researchers from falling into questionable research practices. As a consequence, the focus of the work shifts from having ‘nice’ results to having ‘accurate’ results.

What is preregistration about?

When preregistering a study, the researcher (or research team) determines the plan of the research to be performed before starting the data collection and/or data analysis. Basically, this plan contains:

- the research question

- the hypothesis

- the dependent and independent variables that will be collected

- the study design

- the planned methodology

- the sample size and the method to determine the sample size

- the way the data will be analyzed

- the outlier and data exclusion criteria

The plan is then timestamped and deposited into a registry. This does not necessarily mean that the plan has to be publicly available or that there is no flexibility in the analysis of the data after these have been obtained, nor that one should stop performing exploratory research. It should, however, be clear to the reader of the article which parts of the research are hypothesis testing and which are unexpected findings that drive a new hypothesis. Furthermore, any deviations from the preregistration should be made explicit and justified.
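One common way to determine the sample size recorded in such a plan is an a priori power analysis. The following is a minimal Python sketch, not a prescribed method: it assumes a study comparing two group means with a two-sided test and uses the normal approximation n = 2 × ((z(1 − α/2) + z(power)) / d)² per group, where α is the significance level, power is the desired probability of detecting the effect, and d is the expected standardized effect size (Cohen's d). The function name and planning values are hypothetical; calculations based on the exact t-distribution give a slightly larger n.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Hypothetical helper using the normal approximation
    n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    where d is the standardized effect size (Cohen's d) assumed in the plan.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_power = z.inv_cdf(power)          # quantile corresponding to the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)                 # round up: fractional participants are not possible


if __name__ == "__main__":
    # Hypothetical planning values; the expected effect size must be justified in the plan itself.
    n = sample_size_per_group(effect_size=0.5, alpha=0.05, power=0.80)
    print(f"Planned sample size: {n} participants per group")
```

With d = 0.5, α = 0.05 and 80% power, this prints a planned sample size of 63 participants per group. The expected effect size is the key assumption in such a calculation and should itself be justified in the preregistration.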

One of the organisations that provide the possibility to deposit and preregister research plans, protocols and data is the Open Science Framework (OSF), a free, open platform to support research and enable collaboration.

Preregistration is well established in the field of clinical trials, where more and more medical journals will only consider a trial for publication if it was registered beforehand. Nowadays, preregistration is finding its way into psychology and other fields as well.

HARKing (Hypothesizing After the Results are Known) refers to the practice of presenting a post hoc hypothesis (i.e. one based on or informed by the results) in a research report as if it were, in fact, an a priori hypothesis. This can be done in several ways, leading to several types of HARKing. For example: (1) by changing or replacing the initial hypothesis with a post hoc hypothesis after the researcher finds out the results; (2) by excluding a priori hypotheses that were not confirmed; (3) by retrieving hypotheses from a post hoc literature search and reporting them as a priori hypotheses.

In many cases researchers are motivated to HARK by a research and publication culture that values confirmation of a priori hypotheses over post hoc hypotheses. To make their results appear stronger and increase the likelihood of publication, researchers HARK their initial insights and hypotheses. The temptation to HARK can be eliminated through preregistration of study designs and analysis plans. Open data sharing can also guard against these practices. We will go into these practices in more detail later on.

In a fishing expedition, the collected data are analysed repeatedly in search of “any” patterns other than the ones predicted by the initial hypothesis that motivated the study (design). This kind of “exploratory research” on the same dataset is different from exploratory research that was planned as part of the study design. Findings from exploring the dataset for “any” existing patterns should be declared as such, even (especially) when these results confirm an a priori hypothesis, because undisclosed fishing of this kind is unacceptable and detrimental to research.

This kind of fishing, also called data snooping, increases the chance of getting false positive results: every dataset contains “spurious correlations” that appear in that particular dataset by coincidence but do not exist in similar datasets. When a good (statistical) model is built, it should be validated on a new, similar dataset whose data did not contribute to building the model. Otherwise, the model might fit the data it was built on so closely that it becomes too specific and does not generalise to similar datasets. The term cherry picking is also used, as in “picking only the best-looking cherries”, or the best-looking results or observations. False positive findings found through data snooping can be unmasked by replication studies. Open data sharing can also guard against these practices.
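The effect of data snooping can be illustrated with a small simulation. The Python sketch below is a hypothetical illustration, not a method from this guide: it generates an outcome and 100 candidate variables that are all pure random noise, so every “significant” correlation is by construction a false positive. With a 5% significance threshold, roughly 5 of the 100 variables can be expected to look significant in the exploration dataset, and most of these should fail to replicate in a new, independent dataset. The p-values use the Fisher z approximation rather than an exact t-test, and the sample size, number of variables and random seed are arbitrary choices.

```python
import math
import random
from statistics import NormalDist


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0, using the Fisher z-transform."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_dataset(rng, n_subjects, n_variables):
    """Outcome and candidate variables that are ALL independent noise: no true effects exist."""
    outcome = [rng.gauss(0, 1) for _ in range(n_subjects)]
    predictors = [[rng.gauss(0, 1) for _ in range(n_subjects)] for _ in range(n_variables)]
    return outcome, predictors


def significant_variables(outcome, predictors, alpha=0.05):
    """Indices of variables whose correlation with the outcome has p < alpha."""
    n = len(outcome)
    return [i for i, x in enumerate(predictors) if p_value(pearson_r(x, outcome), n) < alpha]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is reproducible
    n_subjects, n_variables = 50, 100

    # "Fishing": test every variable in the original dataset and keep whatever looks significant.
    outcome, predictors = simulate_dataset(rng, n_subjects, n_variables)
    hits = significant_variables(outcome, predictors)
    print(f"Exploration: {len(hits)} of {n_variables} noise variables look 'significant'")

    # Validation: measure the same variables again in a new, independent sample.
    new_outcome, new_predictors = simulate_dataset(rng, n_subjects, n_variables)
    new_hits = set(significant_variables(new_outcome, new_predictors))
    replicated = [i for i in hits if i in new_hits]
    print(f"Validation: {len(replicated)} of those {len(hits)} findings replicate")
```

Because no variable is truly related to the outcome, whatever survives the exploration step is a spurious correlation, and the validation step shows how rarely such findings hold up in new data.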

Registered reports

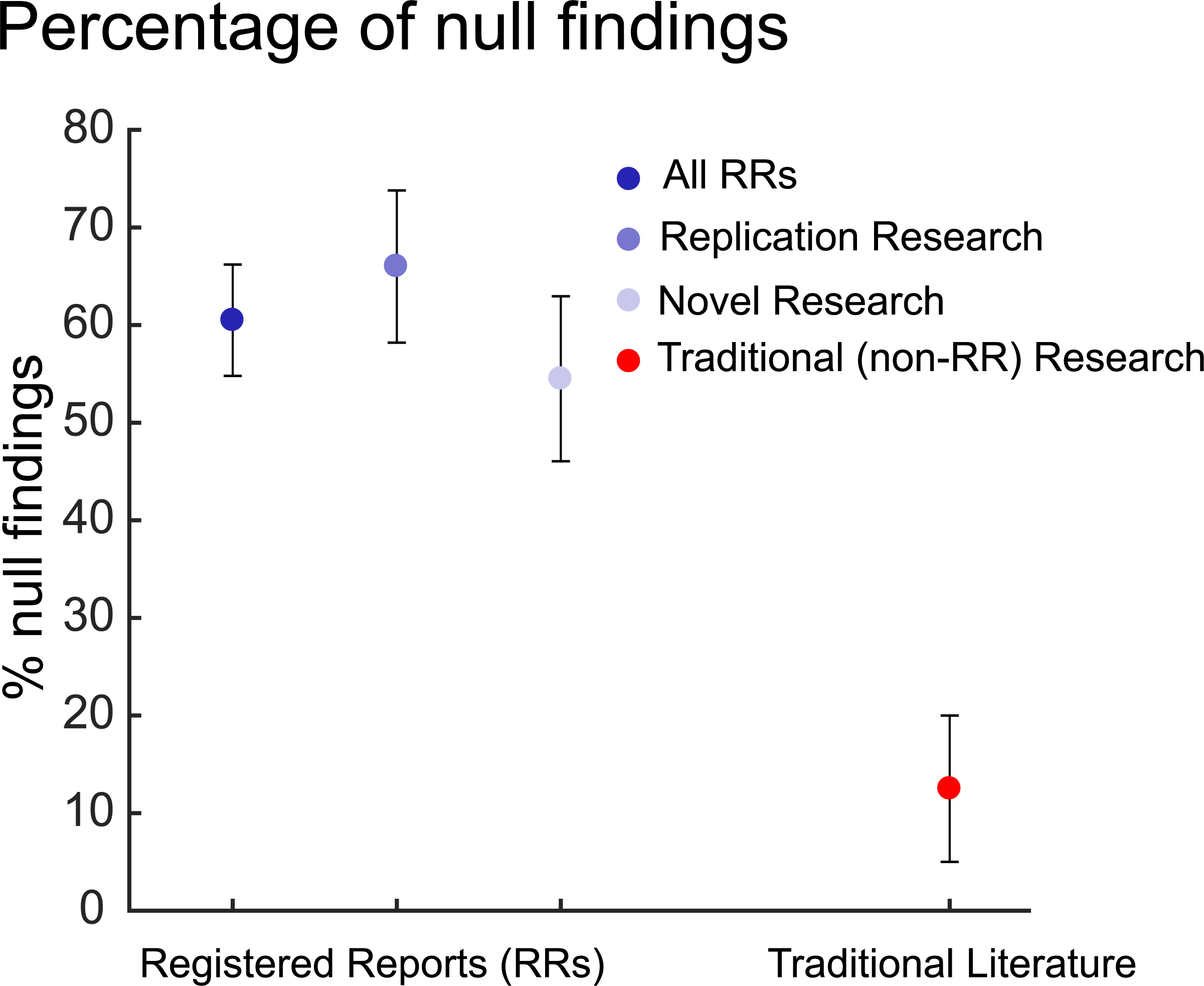

Registered reports are a more formalised form of preregistration and an even more powerful tool to prevent publication bias. In this approach, the proposed plan is sent to a journal before the start of the study (figure 1). The journal then sends the plan (not the results) out for peer review, provides feedback and commits in principle to publishing the study if it is conducted according to the submitted plan. One of the main advantages of this approach is that the study design is reviewed early on, so that problems can be addressed before the study starts. Moreover, in the form of a registered report, preregistration reduces publication bias, as journals publish the work independent of the outcome (figures 2 and 3), and it acknowledges the value of negative results.

Figure 1: Copied from Center for Open Science https://www.cos.io/initiatives/registered-reports available for reuse under a CC BY 4.0 license.

Figure 2: An excess of positive results: comparing the standard psychology literature with registered reports.

Copied from: Anne Scheel, Mitchell Schijen, Daniel Lakens, PsyArXiv Preprints – https://psyarxiv.com/p6e9c/ – CC BY 4.0 International

Figure 3: Percentages of null findings among RRs and traditional (non-RR) literature, with their respective 95% confidence intervals.

Copied from: Christopher Allen, David M.A. Mehler – Open science challenges, benefits and tips in early career and beyond – PLOS Biology 2019 https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3000246#pbio-3000246-g001

Not all journals offer registered reports, but a list of journals that do can be found here:

(Publication) bias

Although often unintended, biases in analysing and interpreting the experimental data, and the hope of finding positive/significant results, might distract researchers from the original research question. In addition, as negative results are often (incorrectly) perceived to be of lower quality and not worth pursuing, this can bias which results are reported in the literature (publication bias). These practices reduce the credibility and replicability of research.